A new report has revealed that DeepSeek spent much more money on training its R1 AI model than it originally claimed.

When DeepSeek announced its R1 model, it shook the tech world by claiming that the model cost only about $6 million to train.

Many were surprised because the model was said to rival OpenAI’s top-tier models.

The news rattled the stock market, wiping out around $1 trillion in market value, with NVIDIA alone losing about $600 billion.

For comparison, OpenAI’s GPT-4 is believed to have cost between $100 million and $200 million to train.

However, new research from SemiAnalysis suggests that DeepSeek’s actual spending was far higher than $6 million.

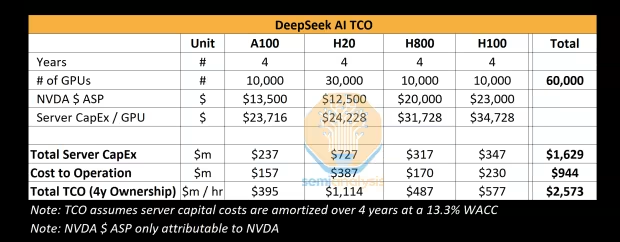

According to the report, the company purchased 10,000 NVIDIA A100 GPUs in 2021. Later, it added 10,000 China-specific NVIDIA H800 AI GPUs and another 10,000 NVIDIA H100 GPUs.

Considering the cost of these GPUs and the expenses involved in training the model, DeepSeek’s total capital investment is estimated at around $1.6 billion.

Additionally, the company is believed to have ongoing operational costs of nearly $944 million.
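To put these figures side by side, here is a minimal Python sketch that compares the reported estimates with the originally claimed cost. It uses only the numbers quoted in this article. The variable and function names are illustrative, and none of the amounts are independently verified.

```python
# Figures as quoted in the article (USD). They are reported estimates,
# not verified data, and the names below are illustrative only.
CLAIMED_TRAINING_COST = 6_000_000      # DeepSeek's stated figure
ESTIMATED_CAPEX = 1_600_000_000        # SemiAnalysis capital investment estimate
ESTIMATED_OPEX = 944_000_000           # SemiAnalysis operating cost estimate

# GPU purchases as described in the report.
GPU_PURCHASES = {"A100": 10_000, "H800": 10_000, "H100": 10_000}


def ratio_to_claim(amount: float) -> float:
    """Return how many times larger `amount` is than the claimed cost."""
    return amount / CLAIMED_TRAINING_COST


total_gpus = sum(GPU_PURCHASES.values())
capex_ratio = ratio_to_claim(ESTIMATED_CAPEX)
opex_ratio = ratio_to_claim(ESTIMATED_OPEX)

print(f"GPUs listed in the report: {total_gpus:,}")
print(f"Capital investment vs. claimed cost: about {capex_ratio:.0f}x")
print(f"Operating costs vs. claimed cost: about {opex_ratio:.0f}x")
```

On these numbers, the estimated capital investment alone is a few hundred times the $6 million figure. The two amounts measure different things, though: one is a stated training cost, the other covers hardware and infrastructure.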

This new information challenges the earlier perception that AI model training was becoming significantly cheaper.

Instead, it suggests that developing high-performance AI still requires massive financial resources.