If you’re looking for complete control over your AI model’s data, security, and performance, running it locally is the best choice.

DeepSeek R1 is an open-source AI model designed for conversations, coding, and problem-solving.

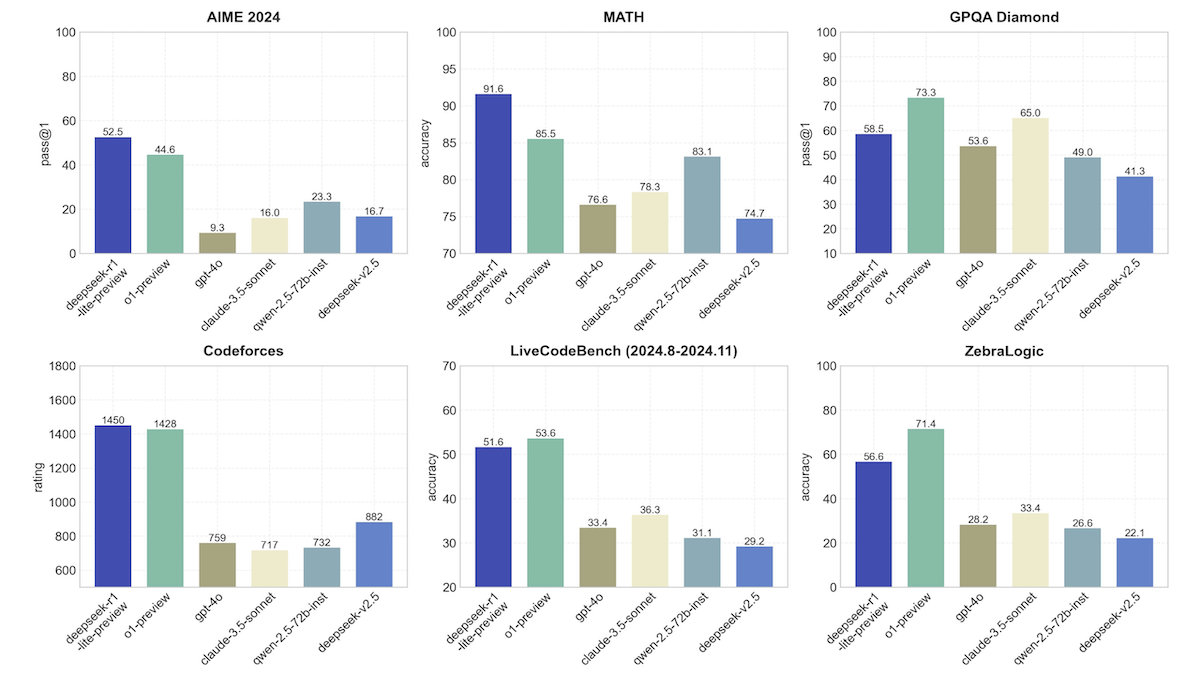

DeepSeek reports that it matches or outperforms OpenAI’s o1 model on several reasoning benchmarks.

In this guide, I will show you how to install and run DeepSeek R1 on your computer, step by step.

Why Choose DeepSeek R1?

DeepSeek R1 is perfect for those who need:

- Conversational AI – Engages in natural, human-like discussions.

- Coding Assistance – Helps generate and refine code effortlessly.

- Problem-Solving – Can tackle math equations, logic problems, and more.

The best part?

Running it locally means better privacy, since no data is sent to external servers, and no network latency, although response speed depends on your hardware.

How to Run DeepSeek R1 Locally Using Ollama

Using Ollama is the easiest way to run DeepSeek R1 locally. Follow these steps to get it running on your PC:

What is Ollama?

Ollama is a tool that lets you run AI models directly on your computer. It simplifies AI deployment by offering:

- Support for many AI models – Including DeepSeek R1.

- Cross-platform compatibility – Works on macOS, Windows, and Linux.

- Easy setup & performance – Simple commands and efficient resource usage.

Why Use Ollama?

- Quick Installation – Get up and running in no time.

- Full Privacy – Everything runs on your machine, keeping your data safe.

- Flexible AI Model Switching – Easily switch between different AI models as needed.

Step-by-Step Guide to Installing and Running DeepSeek R1 Locally

1. Install Ollama

Visit the Ollama website for detailed instructions.

If you’re using macOS, install it with Homebrew:

```bash
brew install ollama
```

For Windows and Linux, follow the steps provided on the Ollama website.

2. Download DeepSeek R1

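Once Ollama is installed, download the DeepSeek R1 model (if `ollama pull` reports that it cannot connect to the server, start the server as shown in step 3 first):

```bash
ollama pull deepseek-r1
```

This pulls the default tag, which is one of the smaller distilled models rather than the full 671B-parameter model. To choose a specific size, append its tag (available tags include `1.5b`, `7b`, `8b`, `14b`, `32b`, `70b`, and `671b`):

```bash
ollama pull deepseek-r1:1.5b
```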
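Before pulling, you may want to check whether a tag is already on disk. `ollama list` prints one row per local model with its name in the first column (a model pulled without a tag shows up as `deepseek-r1:latest`). The helper below is a hypothetical convenience function, not part of Ollama; it assumes the listing starts with a header row, as in current Ollama versions.

```bash
# has_model NAME: reads `ollama list` output on stdin and exits 0 only if
# NAME appears in the first column. The header row (line 1) is skipped.
# Hypothetical helper; not part of the Ollama CLI.
has_model() {
  awk -v name="$1" 'NR > 1 && $1 == name { found = 1 } END { exit !found }'
}
```

For example, `ollama list | has_model deepseek-r1:1.5b || ollama pull deepseek-r1:1.5b` downloads the model only when it is missing.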

3. Start the Ollama Server

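The macOS and Windows desktop apps start the server in the background automatically, and the Linux install script typically sets it up as a background service. If you installed with Homebrew, or the server isn't running yet, start it in a separate terminal window and leave that window open:

```bash
ollama serve
```

If a server is already running, this command fails with an error saying the address is already in use, which you can safely ignore.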
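By default the Ollama server listens on `http://localhost:11434` and answers a plain GET on its root URL. When a script starts `ollama serve` in the background, it helps to wait until that URL responds before sending prompts. The function below is a hypothetical sketch that assumes `curl` is installed and the default address is in use.

```bash
# wait_for_ollama [URL] [TIMEOUT_SECONDS]
# Polls the Ollama server once per second until it answers or the timeout
# expires. Hypothetical helper; assumes curl and the default port 11434.
wait_for_ollama() {
  local url="${1:-http://localhost:11434}"
  local timeout="${2:-30}"
  local waited=0
  until curl -fs --max-time 2 -o /dev/null "$url"; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "Ollama did not respond at $url within ${timeout}s" >&2
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
}
```

A typical use: `ollama serve > ollama.log 2>&1 &` followed by `wait_for_ollama && ollama run deepseek-r1:1.5b "Hello"`.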

4. Run DeepSeek R1

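Now you can start using the model. This opens an interactive chat session (type `/bye` to exit), and if the model isn't downloaded yet, `ollama run` pulls it first:

```bash
ollama run deepseek-r1
```

To use a specific version:

```bash
ollama run deepseek-r1:1.5b
```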

You can also pass a question directly; Ollama prints the answer and exits:

```bash
ollama run deepseek-r1:1.5b "What are the latest trends in Rust programming?"
```

Example prompts to try:

- Chat – What’s the latest news on Rust programming language trends?

- Coding – How do I write a regular expression for email validation?

- Math – Factor this expression: 3x² + 5x - 2.

What Can DeepSeek R1 Do?

DeepSeek R1 is designed to help with:

- Conversational AI – Engage in human-like discussions.

- Coding Assistance – Generate and refine code.

- Problem-Solving – Solve math equations and complex problems.

Running it locally keeps your data private, and once the model is downloaded, no internet access is needed.

Smaller, Faster AI Models

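DeepSeek R1 comes in different sizes. Besides the full 671B-parameter model, there are distilled models (1.5B, 7B, 8B, 14B, 32B, and 70B): smaller Qwen- and Llama-based models trained on DeepSeek R1's outputs. They offer:

- Lower hardware requirements – Can run on less powerful machines.

- Faster responses – Great for real-time coding help.

- Strong performance for their size – Still good at reasoning, though not at the level of the full model.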

Practical Usage of DeepSeek R1

Automate Commands with a Script

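If you use DeepSeek R1 often, save a shortcut script as `ask-deepseek.sh`:

```bash
#!/usr/bin/env bash
# Send all command-line arguments to DeepSeek R1 as a single prompt.
set -euo pipefail
if [ "$#" -eq 0 ]; then
  echo "usage: $0 \"your question\"" >&2
  exit 1
fi
PROMPT="$*"
ollama run deepseek-r1:7b "$PROMPT"
```

Make it executable once with `chmod +x ask-deepseek.sh`; then you can ask questions quickly:

```bash
./ask-deepseek.sh "How do I write a regex for email validation?"
```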
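DeepSeek R1 writes out its reasoning before the final answer, and depending on your Ollama version that reasoning may appear in the output wrapped in `<think>` and `</think>` tags. If you only want the answer, for example when piping the output of `ask-deepseek.sh` into another tool, a small filter can drop it. This is a hypothetical sketch that assumes the tags sit on their own lines; newer Ollama versions may display the reasoning differently, so check `ollama run --help` for built-in options first.

```bash
# strip_think: reads model output on stdin, drops everything from the line
# containing <think> through the line containing </think>, and skips blank
# lines that come before the first printed line of the answer.
# Hypothetical helper; assumes the tags are on their own lines.
strip_think() {
  awk '
    /<think>/                   { skipping = 1 }
    !skipping && (seen || NF)   { print; seen = 1 }
    /<\/think>/                 { skipping = 0 }
  '
}
```

Usage: `./ask-deepseek.sh "What is the capital of France?" | strip_think`. Output without any tags passes through unchanged.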

IDE Integration and Command-Line Tools

Many IDEs allow you to configure external tools or run tasks.

You can set up an action that prompts DeepSeek R1 for code generation or refactoring and inserts the returned snippet directly into your editor window.
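For an editor action that inserts the returned snippet, you usually want just the code, not the explanation around it. Models typically wrap code in markdown fences, so a small filter can pull out the first fenced block. The function below is a hypothetical sketch that assumes triple-backtick fences; if the model's reasoning section also contains code, strip the reasoning first.

```bash
# first_code_block: reads markdown-style model output on stdin and prints
# the contents of the first fenced code block, without the fence lines.
# Hypothetical helper; assumes triple-backtick fences.
first_code_block() {
  awk '
    /^[`][`][`]/ {
      if (inside) exit   # closing fence of the first block: stop reading
      inside = 1         # opening fence, which may carry a language tag
      next
    }
    inside { print }
  '
}
```

An IDE task could then run something like `ollama run deepseek-r1:7b "Write a Rust function that reverses a string" | first_code_block` and insert the result at the cursor.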

Open-source tools like mods provide convenient command-line interfaces to both local and cloud-based LLMs.

Frequently Asked Questions (FAQs)

Which version of DeepSeek R1 should I use?

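The full DeepSeek R1 model has 671B parameters and needs server-class hardware with hundreds of gigabytes of memory, so most people should use a distilled version: 7B, 8B, or 14B on a typical modern PC (depending on your RAM and VRAM), or 1.5B on lighter hardware.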

Can I run DeepSeek R1 on cloud servers or Docker?

Yes! As long as Ollama can be installed, you can run DeepSeek R1 on cloud servers, virtual machines, or even inside a Docker container.
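Ollama publishes an official Docker image, `ollama/ollama`. The sketch below wraps a CPU-only setup in two hypothetical helper functions: one starts the container with a named volume for downloaded models and the default API port published, and the other runs DeepSeek R1 inside it. GPU access needs extra flags such as `--gpus=all` together with the NVIDIA Container Toolkit.

```bash
# Hypothetical helpers around the official ollama/ollama image (CPU-only).
start_ollama_container() {
  # The named volume keeps downloaded models across container restarts;
  # port 11434 is the default Ollama API port.
  docker run -d --name ollama \
    -v ollama:/root/.ollama \
    -p 11434:11434 \
    ollama/ollama
}

ask_in_container() {
  # Runs the ollama CLI inside the container; the model is pulled on first use.
  docker exec ollama ollama run "${DEEPSEEK_MODEL:-deepseek-r1:1.5b}" "$*"
}
```

Run `start_ollama_container` once, then `ask_in_container "What is a Rust trait?"`. Because the port is published, tools on the host can also reach the API at `http://localhost:11434`.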

Can I fine-tune DeepSeek R1?

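Yes. The weights are openly available (for example on Hugging Face), so you can fine-tune them with standard training tools; Ollama itself runs models but does not train them. Check the license of the specific variant before making changes.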
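Retraining the weights happens outside Ollama, but for lighter customization Ollama can derive a new model from an existing one through a Modelfile (`FROM` plus `PARAMETER` lines, registered with `ollama create`). DeepSeek's model card recommends a temperature of about 0.6. In this sketch the function name is hypothetical and the context size of 8192 tokens is only an example value.

```bash
# write_modelfile [PATH]: writes a Modelfile deriving a variant of
# deepseek-r1:7b with temperature 0.6 and an 8192-token context window.
# Hypothetical helper; the settings are example values.
write_modelfile() {
  local path="${1:-Modelfile}"
  cat > "$path" <<'EOF'
FROM deepseek-r1:7b
PARAMETER temperature 0.6
PARAMETER num_ctx 8192
EOF
}
```

Then register and run the variant (the name `deepseek-r1-custom` is just an example): `write_modelfile && ollama create deepseek-r1-custom -f Modelfile`, followed by `ollama run deepseek-r1-custom`.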

Is DeepSeek R1 free for commercial use?

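Yes, within the license terms. DeepSeek R1 itself is MIT-licensed, while the distilled models are also bound by their base models' licenses (Apache 2.0 for the Qwen-based versions, the Llama 3.1 or 3.3 license for the Llama-based 8B and 70B versions). Always review the license of the exact variant before using it commercially.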

Does DeepSeek R1 require an internet connection to work?

No. Once installed, DeepSeek R1 runs entirely on your local machine, meaning you don’t need an internet connection to use it.

What are the system requirements to run DeepSeek R1?

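The requirements depend on the model size. The full 671B model needs hundreds of gigabytes of memory, typically spread across several data-center GPUs. The small distilled versions (1.5B, 7B) run on ordinary hardware with 8 GB or more of RAM, and the larger distilled versions need correspondingly more RAM or VRAM; a GPU speeds up responses but isn't required.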
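As a rough rule of thumb (an approximation, not an official sizing guide), the model weights alone take about $N \times b / 8$ bytes, where $N$ is the number of parameters and $b$ is the number of bits stored per weight. Assuming about 4 bits per weight, which is typical of the quantized builds Ollama serves by default:

$$
\begin{aligned}
\text{memory} &\approx N \times \frac{b}{8}\ \text{bytes} \\
\text{7B distilled model:}\quad 7 \times 10^{9} \times \tfrac{4}{8} &= 3.5 \times 10^{9}\ \text{bytes} \approx 3.5\ \text{GB} \\
\text{full 671B model:}\quad 671 \times 10^{9} \times \tfrac{4}{8} &= 335.5 \times 10^{9}\ \text{bytes} \approx 335.5\ \text{GB}
\end{aligned}
$$

Actual downloads are somewhat larger because some tensors are kept at higher precision, and running the model needs extra memory for the context window (the KV cache), so leave headroom above these figures.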

Does DeepSeek R1 support multiple languages?

Yes, but it is strongest in English and Chinese. DeepSeek notes that it may mix languages when handling queries in other languages, so performance varies for less common ones.

Can DeepSeek R1 be integrated into web applications?

Yes. Ollama exposes a local HTTP API (on port 11434 by default), so chatbots, web apps, and other tools can send prompts to DeepSeek R1 and read back its answers.
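For integrations, Ollama serves an HTTP API on `http://localhost:11434` by default. A POST to `/api/generate` with a JSON body containing `model`, `prompt`, and `"stream": false` returns a single JSON object whose `response` field holds the answer. The sketch below builds that body in plain bash and sends it with `curl`. The function names are hypothetical, and the escaping only covers backslashes, double quotes, newlines, tabs, and carriage returns, so a real application should use a proper JSON library.

```bash
# json_escape STRING: escapes the characters most likely to break a JSON
# string literal (backslash first, then quote, newline, tab, carriage return).
json_escape() {
  local s="$1"
  s=${s//\\/\\\\}
  s=${s//\"/\\\"}
  s=${s//$'\n'/\\n}
  s=${s//$'\t'/\\t}
  s=${s//$'\r'/\\r}
  printf '%s' "$s"
}

# build_payload MODEL PROMPT: prints a non-streaming /api/generate request body.
build_payload() {
  printf '{"model":"%s","prompt":"%s","stream":false}' \
    "$(json_escape "$1")" "$(json_escape "$2")"
}

# ask_api MODEL PROMPT: sends the request to a local Ollama server and prints
# the raw JSON response (the answer is in its "response" field).
ask_api() {
  curl -fsS http://localhost:11434/api/generate \
    -H 'Content-Type: application/json' \
    -d "$(build_payload "$1" "$2")"
}
```

With the separate `jq` tool installed, `ask_api deepseek-r1:1.5b "Explain Rust lifetimes in one paragraph." | jq -r '.response'` prints just the answer text.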

How does DeepSeek R1 compare to GPT models?

Unlike cloud-hosted models such as GPT-4, DeepSeek R1 can run entirely on your own hardware, which keeps your data private and avoids network latency. The distilled versions that fit on a typical PC are less capable than the full model and than top cloud models, but they offer solid performance for conversational AI, coding help, and reasoning tasks.

How does DeepSeek R1 handle privacy?

Since it runs locally, no data is sent to external servers, ensuring maximum privacy. This makes it a great option for sensitive applications.

Can DeepSeek R1 generate images or videos?

No, DeepSeek R1 is a text-based model optimized for chat, coding, and problem-solving. If you need AI-generated images, consider models like Stable Diffusion or DALL·E.

Final Thoughts

DeepSeek R1 is a powerful AI model that you can run locally for privacy, performance, and customization.

Whether you need help with coding, problem-solving, or conversations, this open-source model offers flexibility and top-tier AI capabilities.

Try it out today and see how it enhances your workflow!