Qwen is a family of advanced artificial intelligence language models developed by Alibaba Cloud.

These models are designed to understand and generate human-like text. One of the most crucial aspects of any AI model is its sequence length, which determines how much text it can process at one time.

A longer sequence length allows the model to handle more complex and extended conversations, making it highly effective for applications like document processing, chatbots, and research analysis.

Over time, Qwen models have improved their sequence length capabilities, allowing them to process significantly more text in a single run.

But how far can they go? Let’s dive into the details.

What is Sequence Length in AI Models?

In AI language models, sequence length refers to the maximum number of tokens (words, characters, or subwords) a model can process in a single input.

Simply put, the higher the sequence length, the more information the model can consider at once, leading to better context retention and more accurate responses.

For example, a model with a short sequence length cannot take in a long document all at once. The text has to be truncated or split, so by the time the model reaches the end it may no longer see the beginning. A model with a longer sequence length can keep the whole text in view and generate coherent responses about it.
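To make the idea concrete, here is a minimal sketch. It uses a naive whitespace split as a stand-in for a real tokenizer (an assumption: Qwen's actual tokenizer splits text into subword tokens and would give different counts). It also keeps only the most recent tokens that fit in the window, which is one common truncation strategy.

```python
def toy_tokenize(text: str) -> list[str]:
    """Hypothetical stand-in for a real tokenizer: one token per whitespace-separated word."""
    return text.split()


def truncate_to_context(tokens: list[str], max_len: int) -> list[str]:
    """Keep only the last `max_len` tokens; everything earlier is never shown to the model."""
    if max_len <= 0:
        return []
    return tokens[-max_len:]


text = "The contract begins on 1 March. " + "Filler clause. " * 10 + "When does the contract begin?"
tokens = toy_tokenize(text)

short_ctx = truncate_to_context(tokens, 12)  # a small window
long_ctx = truncate_to_context(tokens, 64)   # a window larger than the whole text

print(len(tokens))            # 31
print("March." in short_ctx)  # False: the start date was cut off
print("March." in long_ctx)   # True: the start date is still visible
```

With the 12-token window, the question survives but the sentence that answers it does not. This is the "forgetting the beginning" problem described above.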

Evolution of Sequence Length in Qwen Models

Alibaba Cloud has continuously improved the Qwen models to support longer sequences, making them more capable for large-scale text analysis.

Let’s take a look at how different versions of Qwen handle sequence length:

Qwen-14B – The Early Version

The Qwen-14B model was one of the earlier versions and had a relatively limited sequence length.

While effective for standard text generation and conversations, it wasn’t optimized for handling lengthy documents or complex multi-turn dialogues.

Qwen2.5 – A Major Upgrade

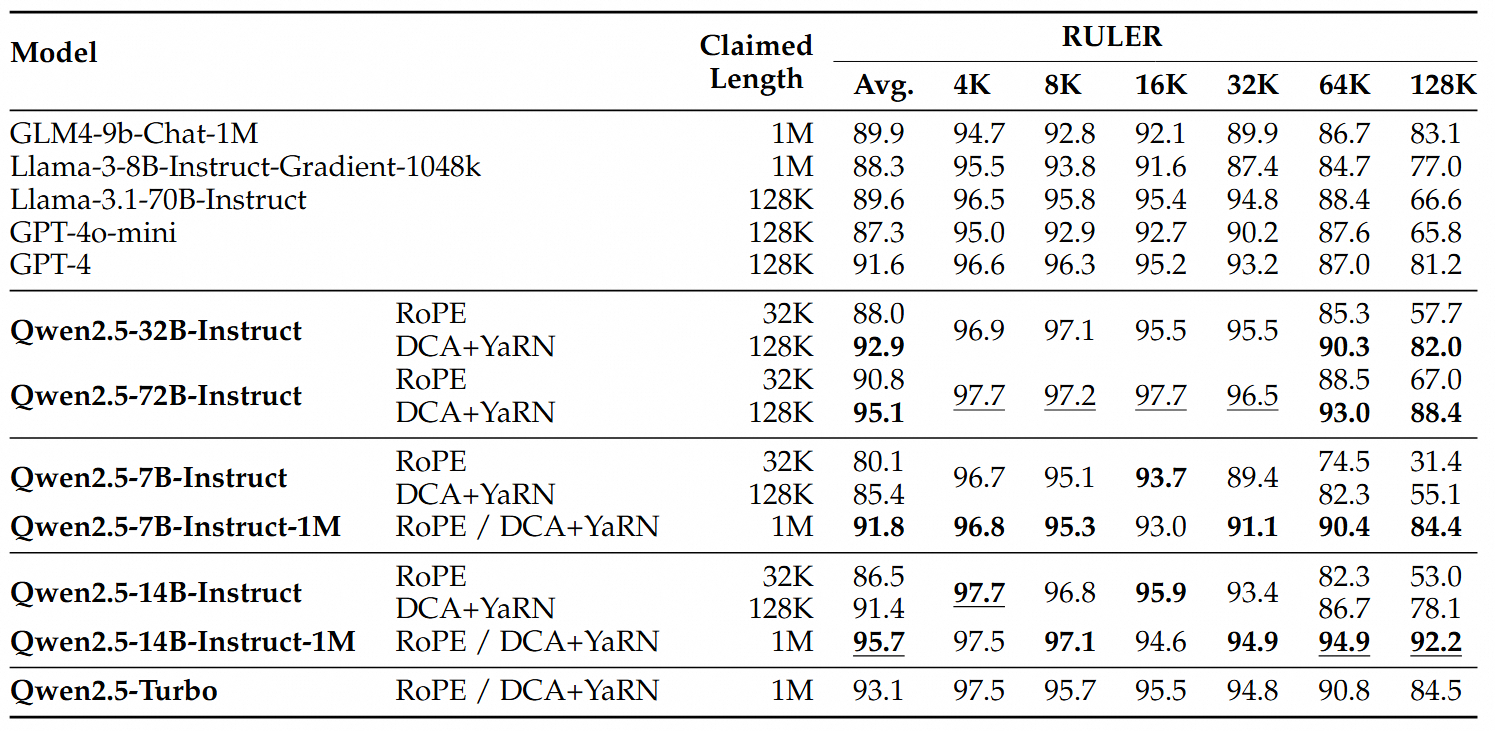

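With the introduction of Qwen2.5, the default maximum sequence length increased significantly to 32,768 tokens. The model cards for the larger Qwen2.5 models also describe extending this to 131,072 tokens with YaRN rope scaling.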

This upgrade made Qwen much more effective for long-form conversations, document summarization, and detailed content analysis.

This version became a strong choice for businesses and developers needing advanced AI models for extensive text processing.

Qwen2.5-1M – The Game Changer

The most advanced long-context version, Qwen2.5-1M, takes things to the next level.

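This version supports an impressive maximum sequence length of 1 million tokens. That is made possible in part by Dual Chunk Attention (DCA), a technique that splits long inputs into chunks and remaps token positions so the model never encounters position distances larger than those it saw during training.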

This breakthrough allows the model to handle massive amounts of text in one go, making it suitable for tasks like:

- Processing entire books and research papers.
- Handling long legal or technical documents.
- Understanding multi-turn conversations with deep context awareness.
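The core idea of Dual Chunk Attention can be illustrated with a simplified sketch. The sequence is split into fixed-size chunks, and each key keeps only its position inside its own chunk. A query attending to a key in the same chunk uses its own in-chunk position. A query attending to an earlier chunk is given a fixed position index just below the trained length. As a result, every remapped query-key distance stays within the range the model saw during training.

This is a deliberately simplified assumption. It covers only the same-chunk and earlier-chunk cases, whereas the published method also treats neighboring chunks separately. The parameters `chunk_size` and `train_len` are hypothetical, not Qwen2.5-1M's actual settings.

```python
def dca_relative_distance(i: int, j: int, chunk_size: int, train_len: int) -> int:
    """Simplified DCA-style distance between query position i and key position j (j <= i).

    - Keys use their position within their chunk: j % chunk_size.
    - Same chunk: the query uses i % chunk_size, so the distance is i - j.
    - Earlier chunk: the query uses the fixed index train_len - 1, so the
      distance stays below train_len no matter how far apart the tokens are.
    """
    if j > i:
        raise ValueError("causal attention: key must not come after query")
    if chunk_size > train_len:
        raise ValueError("chunk_size must not exceed train_len")
    key_pos = j % chunk_size
    if i // chunk_size == j // chunk_size:
        query_pos = i % chunk_size
    else:
        query_pos = train_len - 1
    return query_pos - key_pos


def max_distance(seq_len: int, chunk_size: int, train_len: int) -> int:
    """Largest remapped distance over all causal (query, key) pairs."""
    return max(
        dca_relative_distance(i, j, chunk_size, train_len)
        for i in range(seq_len)
        for j in range(i + 1)
    )


# A 64-token sequence processed by a toy "model" trained on only 16 positions.
print(max_distance(seq_len=64, chunk_size=8, train_len=16))  # 15
```

Plain positions would produce distances up to 63 in this toy setting. The remapped distances never reach 16, which is the property that lets a model run past its trained length.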

Why Does Sequence Length Matter?

The sequence length of an AI model impacts how well it can process and understand text. Here’s why a longer sequence length is beneficial:

1) Better Context Retention

A longer sequence length helps the AI remember more details from earlier in the text.

This is crucial for chatbots, virtual assistants, and document analysis tools that need to maintain context over long discussions or multiple pages of content.
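One simple way for a chatbot to stay within its window is to drop the oldest turns once the conversation exceeds the token budget, while always keeping the system prompt. The sketch below assumes a hypothetical `count_tokens` helper based on a whitespace split, where a real deployment would count with the model's own tokenizer. It shows one basic policy, not how any particular Qwen application manages history.

```python
def count_tokens(text: str) -> int:
    """Hypothetical token counter: whitespace-separated words stand in for real tokens."""
    return len(text.split())


def trim_history(messages: list[dict], max_tokens: int) -> list[dict]:
    """Drop the oldest non-system turns until the conversation fits in max_tokens.

    messages: list of {"role": ..., "content": ...} dicts, oldest first.
    A leading system message is always kept.
    """
    system = messages[:1] if messages and messages[0]["role"] == "system" else []
    turns = messages[len(system):]
    budget = max_tokens - sum(count_tokens(m["content"]) for m in system)

    kept = []
    # Walk backwards so the most recent turns are kept first.
    for msg in reversed(turns):
        cost = count_tokens(msg["content"])
        if cost > budget:
            break
        kept.append(msg)
        budget -= cost
    return system + list(reversed(kept))


chat = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "My order number is 4417."},
    {"role": "assistant", "content": "Thanks, I have noted order 4417."},
    {"role": "user", "content": "What was my order number again?"},
]
print(len(trim_history(chat, max_tokens=30)))  # 4: everything fits
print(len(trim_history(chat, max_tokens=12)))  # 2: system prompt + latest question
```

With the 12-token budget, the turns containing the order number are dropped, so the assistant can no longer answer. A longer sequence length avoids exactly this kind of loss.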

2) Improved Accuracy

When an AI model can process more information at once, it reduces the chances of misunderstanding the context.

This results in more relevant and precise responses, whether for customer support, academic research, or creative writing.

3) Greater Flexibility

Different applications require different sequence lengths.

While short sequence lengths work for simple conversations, longer sequence lengths make it possible to analyze and summarize lengthy documents, generate comprehensive reports, and handle large datasets.

How to Adjust the Sequence Length in Qwen

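If you’re fine-tuning a Qwen model, you can set the maximum sequence length to suit your data.

In the Qwen fine-tuning scripts, this is done with the --model_max_length training argument, and training sequences are padded or truncated to that length. Note that raising this value beyond what the model was trained to handle does not, by itself, extend the model's usable context.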
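A maximum-length setting such as --model_max_length is typically applied by cutting or padding each tokenized training example to exactly that many positions before batching. The sketch below shows that step with plain Python lists. The function name and the pad ID of 0 are hypothetical. The label value -100 follows the common PyTorch convention for positions the loss should ignore, not anything confirmed for a specific Qwen script.

```python
IGNORE_INDEX = -100  # common PyTorch convention: labels with this value are excluded from the loss


def pad_or_truncate(
    input_ids: list[int], model_max_length: int, pad_id: int = 0
) -> tuple[list[int], list[int], list[int]]:
    """Return (input_ids, labels, attention_mask), each exactly model_max_length long.

    pad_id=0 is a hypothetical placeholder; real tokenizers define their own pad token.
    """
    ids = input_ids[:model_max_length]        # drop anything past the limit
    n_pad = model_max_length - len(ids)
    attention_mask = [1] * len(ids) + [0] * n_pad
    labels = ids + [IGNORE_INDEX] * n_pad     # padding never contributes to the loss
    ids = ids + [pad_id] * n_pad
    return ids, labels, attention_mask


ids, labels, mask = pad_or_truncate([101, 102, 103], model_max_length=5)
print(ids)     # [101, 102, 103, 0, 0]
print(labels)  # [101, 102, 103, -100, -100]
print(mask)    # [1, 1, 1, 0, 0]

ids, _, _ = pad_or_truncate(list(range(10)), model_max_length=4)
print(ids)     # [0, 1, 2, 3]
```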

However, keep in mind that longer sequence lengths require more computational power and memory, so balancing performance and efficiency is essential.
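To see why memory grows with sequence length, here is a back-of-the-envelope estimate of the key-value (KV) cache that a transformer keeps during generation. There are two tensors (keys and values) per layer, each holding num_kv_heads × head_dim numbers per token. The layer and head counts below are illustrative assumptions for a mid-sized model with grouped-query attention, not an official Qwen configuration. The estimate also ignores model weights and activations.

```python
def kv_cache_bytes(seq_len: int, num_layers: int, num_kv_heads: int,
                   head_dim: int, bytes_per_value: int = 2) -> int:
    """Approximate KV-cache size for one sequence.

    2 (keys and values) x layers x KV heads x head dim x tokens x bytes per value.
    bytes_per_value=2 assumes 16-bit (fp16/bf16) storage.
    """
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * bytes_per_value


# Illustrative (assumed) shape: 28 layers, 4 KV heads of size 128.
cfg = dict(num_layers=28, num_kv_heads=4, head_dim=128)
for n in (32_768, 1_000_000):
    gib = kv_cache_bytes(n, **cfg) / 2**30
    print(f"{n:>9,} tokens -> {gib:6.2f} GiB of KV cache")
```

Under these assumptions, the cache for a single sequence is about 1.75 GiB at 32,768 tokens but roughly 53 GiB at 1 million tokens. On top of that, standard attention compares every token with every other token, so the compute needed to process a prompt grows roughly quadratically with its length.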

Conclusion

Qwen has made impressive strides in increasing sequence length, making it a strong option for working with long texts.

- Qwen-14B had a limited sequence length, suitable for basic conversations and text generation.
- Qwen2.5 raised the default sequence length to 32,768 tokens, allowing much longer inputs and more detailed analysis.
- Qwen2.5-1M extended the limit to 1 million tokens, enabling the processing of entire books, research papers, and complex conversations.

With these advancements, Qwen models offer context lengths to suit a wide range of workloads, catering to industries from business and education to research and AI development.

If you’re looking for a powerful AI model to handle long-form text efficiently, Qwen2.5-1M is undoubtedly a game-changer!